I think you’ll agree that 48,000 is a lot of people: nearly enough to fill St James’ Park (52,405). A LOT of people, but not as many as 130,000, which would overflow the Camp Nou and make a substantial crowd even in the interestingly named Rungrado 1st of May Stadium in Pyongyang (150,000).

Just as Shaw said that the English invented cricket to give them some idea of eternity and geography is measured in units the size of Wales, so football crowds are useful in helping picture volumes of people.

So, does it matter if austerity killed a Rungrado’s worth of people from 2012 to 2017 or if it was only the size of Newcastle United’s crowd? Either would be appalling. But precisely because either claim is so damning, it seems doubly important to be accurate when making it, as the Institute for Public Policy Research (IPPR) did in its recent report “Ending the Blame Game: The Case for a New Approach to Public Health and Prevention”.

I’m not sure I agree with the premise of the IPPR’s title, though there is plenty in the report with which I concur, both through hard evidence and subjective experience. Yet I can’t get past that headline about deaths, because it is simply wrong.

The report itself makes the 130,000 deaths claim on page 6, but provides no citation or data source, stating simply that the estimate was made on the basis of extrapolating the trend for “deaths attributable to a preventable risk factor” from 1990 to 2012 then subtracting the extrapolated from observed numbers in subsequent years.

The term “deaths attributable to a preventable risk factor” is not a standard definition. It doesn’t even appear as a phrase in a Google search. However, the report’s summary states: “Over half of the disease burden in England is deemed preventable, with one in five deaths attributed to causes that could have been avoided.” This is more familiar. A figure of 1 in 5 is broadly in line with the definition of “mortality from causes considered preventable”, used by Public Health England (PHE) in its Public Health Profiles. Eurostat, in explaining the concept of amenable and preventable deaths, points out both that these definitions overlap and that around 1.2 million deaths were either preventable or amenable in the EU in 2015, of a total of over 5.2 million – so again, about 1 in 5.

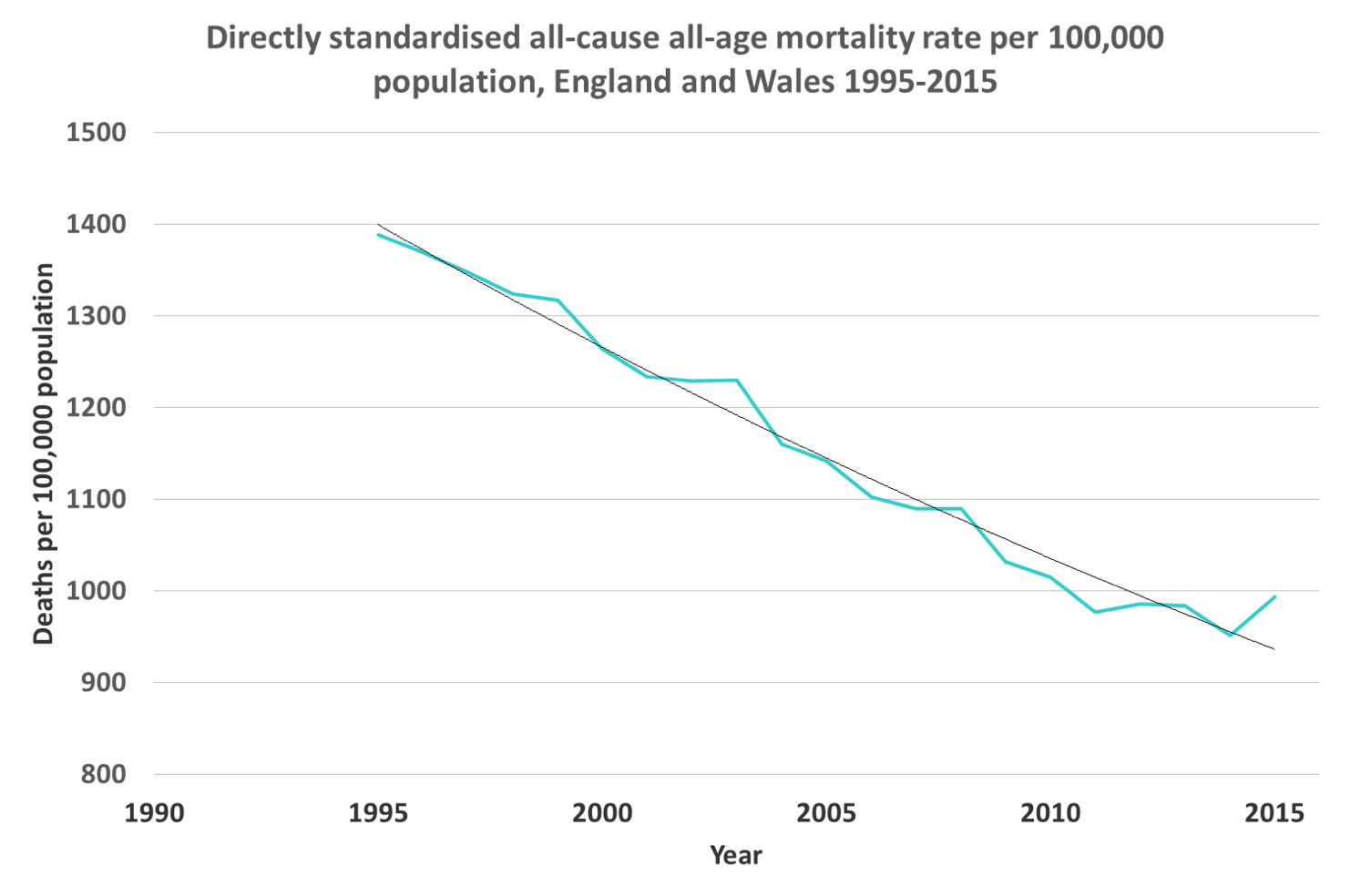

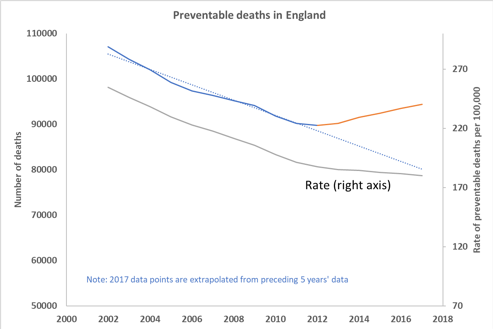

Taking PHE’s numbers from its Fingertips website, we can crudely replicate the IPPR’s stated extrapolation – and here I use the word ‘crude’ advisedly, as we are using crude numbers rather than standardising and re-applying rates to population estimates. The trend looks like this:

For clarity and to allow replication, the numbers I have used here are taken from the 3-year rolling data and, rather than use individual year data, I have divided the 3-year aggregates by 3 and plotted them against the central year, thereby smoothing the curves.
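For anyone wanting to repeat that smoothing step, here is a minimal Python sketch. The function name and the input layout (a dictionary keyed by the first and last year of each 3-year window) are my own assumptions for illustration, and the figures are placeholders, not PHE data.

```python
def annualise_three_year_aggregates(aggregates):
    """Convert 3-year aggregate counts into mean annual counts.

    `aggregates` maps (start_year, end_year) to the total deaths recorded
    over that 3-year window. The result maps the central year of each
    window to the aggregate divided by 3.
    """
    annual = {}
    for (start, end), total in aggregates.items():
        if end - start != 2:
            raise ValueError(f"{start}-{end} is not a 3-year window")
        annual[start + 1] = total / 3
    return annual


# Placeholder numbers for illustration only; these are NOT PHE figures.
example = {(2010, 2012): 300_000, (2011, 2013): 297_000}
print(annualise_three_year_aggregates(example))
```

Because consecutive windows overlap by two years, the resulting series is effectively a 3-year moving average, which is why the plotted curves look smoother than single-year data would.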

I have plotted both the crude numbers (upper line, left axis) and the rate (lower line, right axis), as this neatly illustrates how the trend in crude deaths exaggerates the reversal of the decline in preventable deaths. The pattern appears in both, but is much smaller than the crude figures might imply and, in part, reflects the increase in the older population projected for this decade.

The dotted line indicates my extrapolation of crude number data for 1990-2012. For both crude and rate data I have taken a linear extrapolation of the preceding 5 years’ data to derive assumed figures for 2017.

Subtracting the extrapolated downward trend from the actual observations (plus that additional point for 2017) yields a total of ‘excess deaths’ of roughly 48,500.
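The subtraction can be sketched in a few lines of Python. This assumes an ordinary least-squares straight line fitted to the years up to 2012 and projected forward; the IPPR does not say how its trend was fitted, so treat this as one plausible reading, not its method. The function names are mine and the series below is made up purely to show the arithmetic.

```python
def fit_line(years, values):
    """Ordinary least-squares fit of values = slope * year + intercept."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def excess_over_trend(series, fit_end):
    """Observed minus extrapolated deaths for each year after `fit_end`.

    `series` maps year -> annual deaths. The trend is fitted only to years
    up to and including `fit_end`, then projected onto the later years.
    """
    fit_years = [y for y in sorted(series) if y <= fit_end]
    slope, intercept = fit_line(fit_years, [series[y] for y in fit_years])
    return {
        year: series[year] - (slope * year + intercept)
        for year in sorted(series)
        if year > fit_end
    }


# Made-up series for illustration only; these are NOT PHE figures.
series = {2008: 1000, 2009: 950, 2010: 900, 2011: 850, 2012: 800,
          2013: 790, 2014: 780}
excess = excess_over_trend(series, fit_end=2012)
print(excess, sum(excess.values()))
```

Here the pre-2013 trend falls by 50 a year, so the projected values are 750 and 700, and the excess is 40 + 80 = 120. Any year without an observation, such as 2017 in the real exercise, would first need an assumed value added to `series`.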

Bearing in mind that crude deaths exaggerate the actual effect because of demographic shift, the true number is necessarily smaller than this, though it is difficult to say by how much. It is probably fair to say that 48,500 is more likely to be the upper end of the range or confidence interval rather than a central estimate.

In summary, I would say that the excess of deaths over trend is real but is unlikely to have totalled more than the capacity of St James’ Park.

Facts matter, and exaggeration undermines the objective force of our arguments. I hate that saying “it was fewer than a football stadium’s worth” makes me sound as if I am playing down just how disgraceful that number actually is. But, as far as I can see, 130,000 is simply wrong, and the IPPR should either explain its reasoning properly or withdraw the claim.

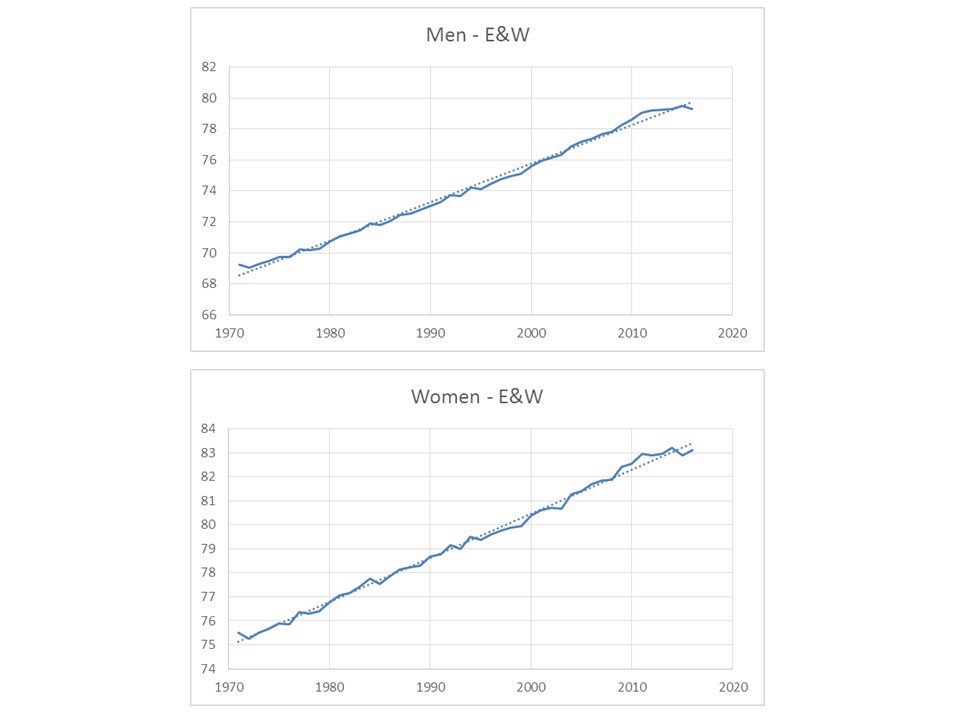

It beggars belief that the arrival of the NHS had a negative effect on health, wellbeing and life expectancy. I don’t believe it and neither, I would guess, do you. On the other hand, this illustrates a key message about the relationship between the provision of care and mortality – it has far less effect than most people, including Watkins et al, think.

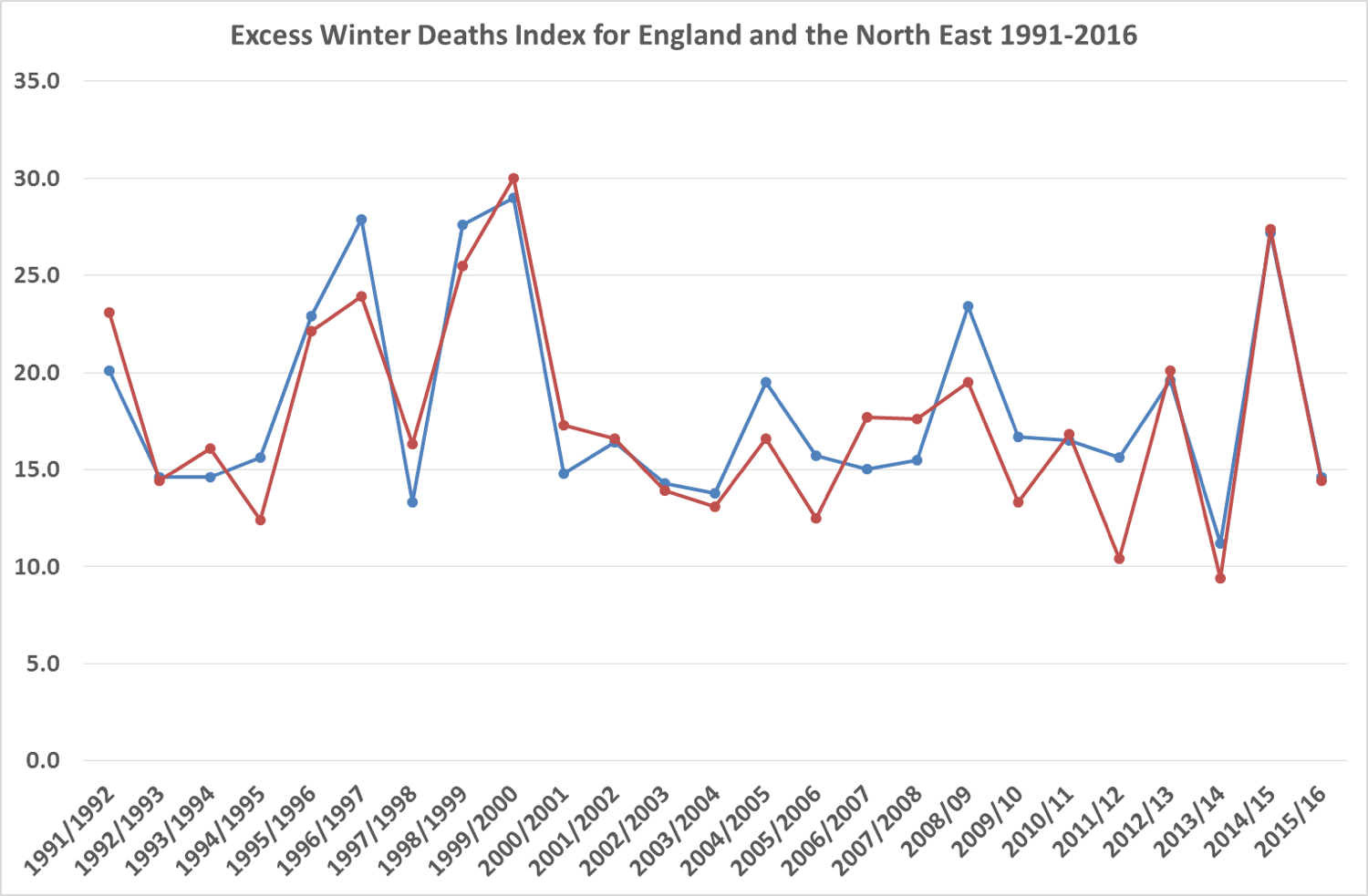

It is also worth noting here that the excess of winter deaths in 2014-15 is not unprecedented: similar levels occurred in the 1990s.
